This is part one in a series of posts for developing on a Mac. Note the distinction between developing on ...

When I was a kid, my family called Chex Mix “Texas Trash”. With every passing year the ingredients became more ...

In late March 2020 Apple purchased the Dark Sky app, and along with it the Dark Sky API. No longer ...

This is my first post featuring the Racket language. At some point we may start evangelizing and looking down our ...

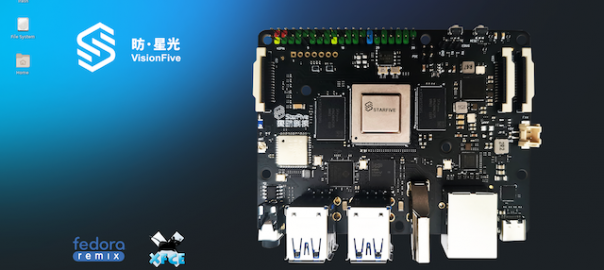

I’ve been excited to get my hands on a StarFive VisionFive and it finally arrived last week after being on ...

If you’re writing Swift code for iOS you’re most likely going to be doing so in Xcode. If you’re coding ...

It has been nearly 6 years since Apple first open sourced the Swift language and brought it to Linux. In ...

One of the reasons I took the plunge and bought an M1-based Mac is to test out its performance and ...

Apple has transitioned between instruction set architectures several times now throughout its history. First, from 680x0 to PowerPC, then ...

From time to time you run into an issue that requires no end of Googling to sort through. That was ...