I can already hear the rabble shouting, “Why would you use GoDaddy as your domain registrar?!” A fair question, but ...

Category: DevOps

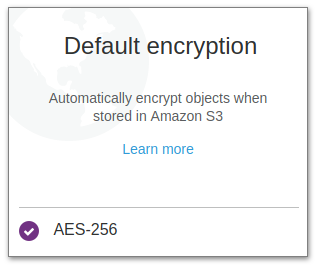

Encrypting Existing S3 Buckets

Using encryption everywhere, particularly in cloud environments, just makes good sense. AWS S3 makes it ...

TLS 1.3 with NGINX and Ubuntu 18.04 LTS

OpenSSL 1.1.1 is now available on Ubuntu 18.04 LTS with the release of 18.04.3. This backport of OpenSSL 1.1.1 has ...

Auditing Shared Account Usage

Occasionally you find yourself in a situation where using a shared account cannot be avoided. One such scenario is managing ...

A Script for Testing Membership in a Unix Group

Sometimes you just need a boolean test for a given question. In this post we’ll look at answering the question, ...

TLS 1.3 with NGINX and Ubuntu 18.10

TLS 1.3 is on its way to a webserver near you, but it may be a while before major sites ...

Updating Yarn’s Apt Key on Ubuntu

If you’re one of those unfortunate souls who run into the following error when running apt update you are ...

Leveraging Instance Size Flexibility with EC2 Reserved Instances

Determining which EC2 reserved instances to purchase in AWS can be a daunting task, especially given the fact that you’re ...

Ansible 2.7 Deprecation Warning – apt and squash_actions

Ansible 2.7 was released recently and brought with it a new deprecation warning for the apt module: ...

Updating From Such a Repository Can’t Be Done Securely

I recently came across the (incredibly frustrating) error message “Updating from such a repository can't be done securely” while trying ...