There are times when you want to configure your website to explicitly disallow access from certain countries, or only allow ...

As others have written, Apple appears to be making a hash of the ability to automate tasks on ...

There are times when you’ll not only want to have separate vault files for development, staging, and production, but when ...

In Part 5 of our series, we’ll explore provisioning users and groups with Ansible on our AWS servers. Anyone who ...

So far in our series we’ve covered some fundamental Ansible basics. So fundamental, in fact, that we really haven’t shared ...

This is the third article in a series looking at utilizing Ansible with AWS. So far the focus has been ...

In this post we’re going to look at Ansible variables and facts, but mostly at variables (because I haven’t worked ...

It’s been some time since I’ve posted to this blog, which is a shame, because I do indeed enjoy writing ...

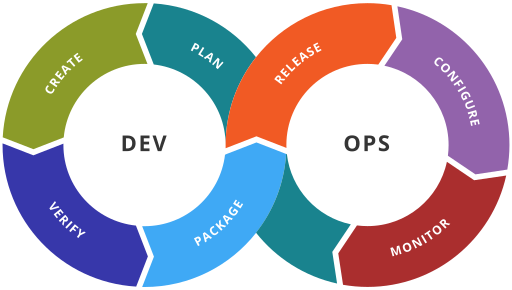

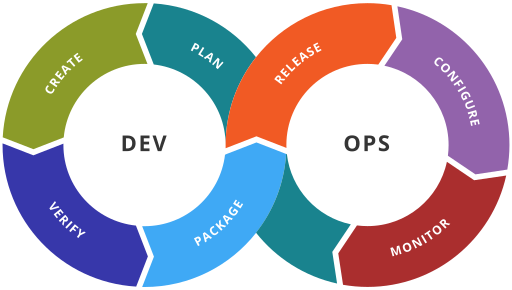

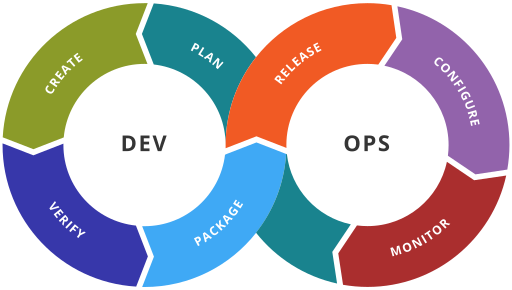

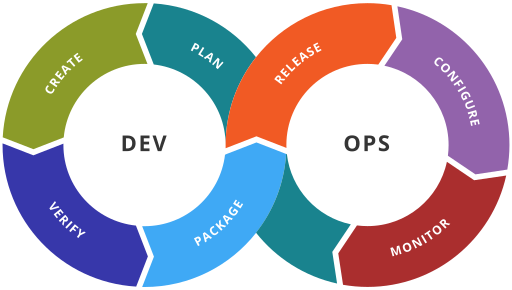

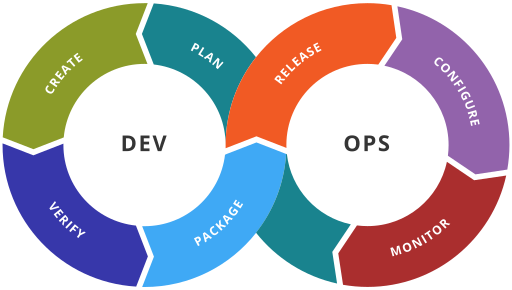

DevOps ToolChain, Wikipedia, CC BY-SA 4.0. Why Hardening: Hardening, as I define it, is the process of applying best practices ...

There was a time when setting up a Continuous Integration server took a lot of work. I personally have spent ...