Ubuntu 24.04 LTS, the “Noble Numbat”, has arrived, and I wanted to get Greenbone OpenVAS up and running on it. ...

Different processors and instruction set architectures have always fascinated me. As a child of the late 70s and 80s I ...

A few years back I purchased a SiFive HiFive1 Rev B board to join in the RISC-V revolution. In this ...

Troubleshooting digital signals and protocols such as Serial Peripheral Interface (SPI) and Inter-Integrated Circuit (I2C) can be notoriously difficult. The ...

You may have noticed that Raspberry Pi 4s are a bit hard to come by these days. The all-knowing Google ...

In late March 2020, Apple purchased the Dark Sky app, and along with it the Dark Sky API. No longer ...

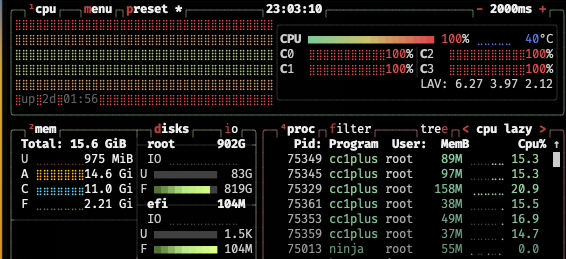

One of the reasons I took the plunge and bought an M1-based Mac is to test out its performance and ...

Hey kids! Today we’re going to take a look at the SiFive HiFive1 Rev B and Freedom Metal I2C API. ...

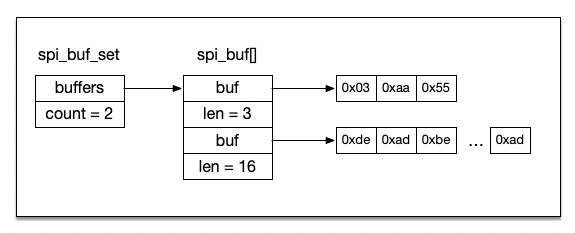

In our last post we looked at the GPIO pins of the SiFive HiFive1 Rev B board, and in this ...

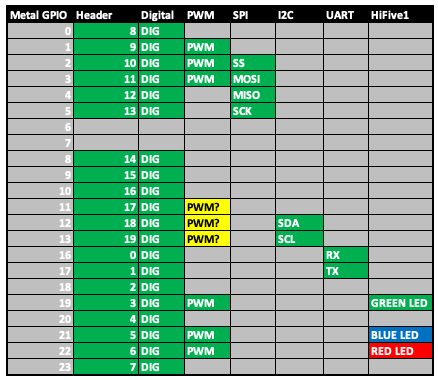

Let’s make use of the HiFive1 Rev B schematics to map out the GPIO controller device pins. Of particular interest ...