I wrote Part I of the Developing on a Mac series to provide a foundation upon which to build a ...

Different processors and instruction set architectures have always fascinated me. As a child of the late 70s and 80s, I ...

A few years back I purchased a SiFive HiFive1 Rev B board to join in the RISC-V revolution. In this ...

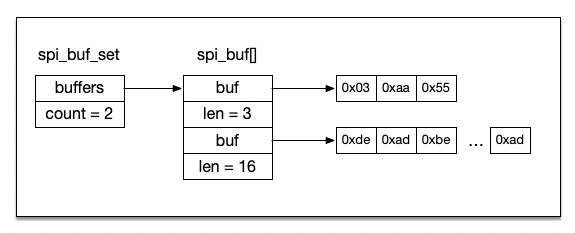

Troubleshooting digital signals and protocols such as Serial Peripheral Interface (SPI) and Inter-Integrated Circuit (I2C) can be notoriously difficult. The ...

You may have noticed that Raspberry Pi 4s are a bit hard to come by these days. The all-knowing Google ...

I wrote Part I of this series to provide a foundation upon which to build a collection of minimal guides ...

This is part one in a series of posts for developing on a Mac. Note the distinction between developing on ...

When I was growing up, my family called Chex Mix “Texas Trash”. With every passing year the ingredients became more ...

In late March 2020 Apple purchased the Dark Sky app, and along with it the Dark Sky API. No longer ...